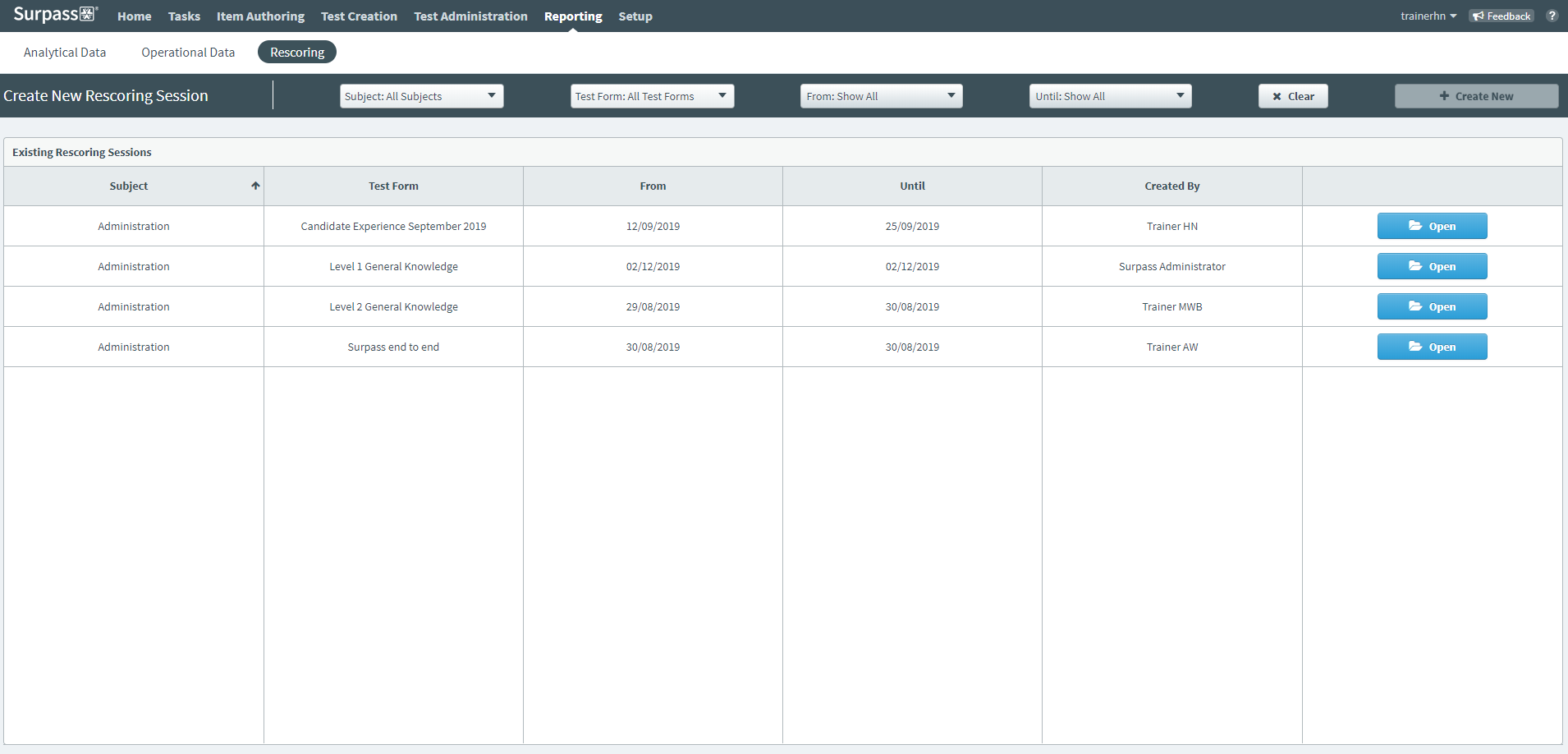

About Rescoring

In Surpass, the Rescoring screen is where you can reduce the impact of incorrect and invalid items. In a rescoring session, you can award full marks to items, add and remove scores, and change the correct answer options.

This article explains how to navigate the Rescoring screen, including how to create and work in a rescoring session.

Navigating to the Rescoring screen

To view the Rescoring screen, go to Reporting > Rescoring.

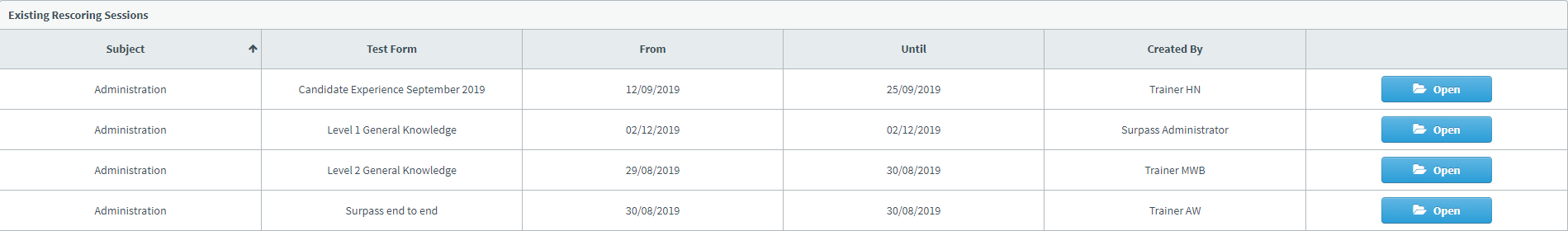

About the Rescoring table

The Rescoring table lists all existing rescoring sessions you have access to. Refer to the following table for more information on each column.

| Column | Description |
|---|---|
| Subject | Displays the name of the subject that contains the test you want to rescore. |
| Test Form | Displays the name of the test form that contains the items you want to rescore. |
| From | Displays the earliest date on which the test was sat. |
| Until | Displays the latest date on which the test was sat. |
| Created By | Displays the name of the user who created the rescoring session. |
| Open | Opens the chosen rescoring session. |

Finding a rescoring session

By default, the Rescoring table displays all current rescoring sessions, ordered by the most recent test session. You can sort the information displayed in the Rescoring table.

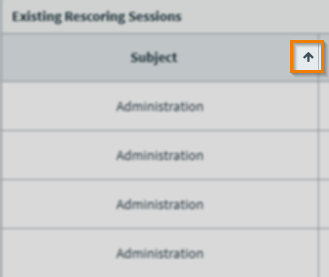

Sorting rescoring sessions

Select a column header to sort the cell data alphabetically or numerically.

The arrow icon on the column header shows whether the column is sorted in ascending or descending order. Select the column header again to toggle between ascending and descending order.

Creating a rescoring session

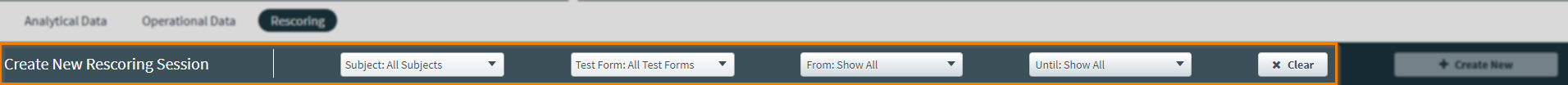

Use the filters in the Rescoring screen to search for the test session that contains the items you want to rescore. You must select a date range that contains a test session. For full instructions on how to create a new rescoring session, read Creating a rescoring session.

Working in a rescoring session

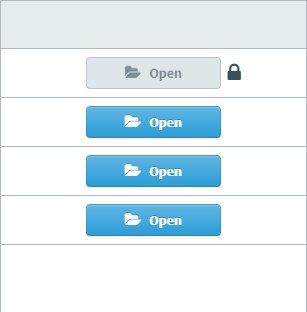

Rescoring sessions can only be edited by one user at a time. When a user is working in a rescoring session, Open is unavailable and cannot be selected until that user has finished.

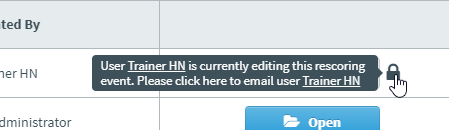

Hover over the padlock icon to view the name of the user currently working in the rescoring session. If you want to ask the user to leave the rescoring session, select the user’s name to send them an email notification.

Actions on the Edit screen in a rescoring session

You can take several actions on the Edit screen of a rescoring session. Refer to the following table for more information on each option.

| Setting | Description |

|---|---|

| Change Answer | Allows users to change the answer option marked as correct on an item. For more information, read Changing answers in Rescoring.

NOTE: You can only change answers for Multiple Choice, Multiple Response, and Either/Or items.

|

| Full Marks | Allows users to award full marks to computer-marked items. For more information, read Awarding full marks in Rescoring. |

| Add Item Score | Allows users to add a score to a non-scored item. For more information, read Adding and removing a score in Rescoring. |

| Remove Item Score | Allows users to remove a score from a scored item. For more information, read Adding and removing a score in Rescoring. |

| Revert Item | Allows users to revert the changes made on items in Rescoring. For more information, read Reverting changes in Rescoring. |

| Rescoring History | Allows users to view a history of the changes made to items in Rescoring. |

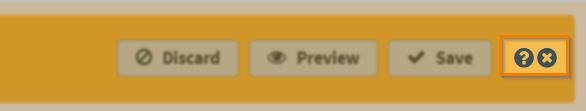

| Discard Changes | Discards changes made to an item in Rescoring. |

| Preview Changes | Previews changes made to an item in Rescoring. |

| Save Changes |

Saves changes made to an item in Rescoring.

NOTE: Changes made to items must be previewed before they can be saved.

|

Select Help to access the inbuilt Surpass help documentation. Select Close to exit the rescoring session and return to the Rescoring screen.

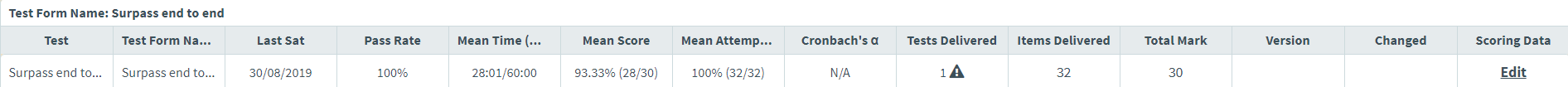

About the Test Forms table

The Test Forms table lists information about the chosen test form in your rescoring session. Each row is dedicated to a single test form. Refer to the following table for information on each column.

| Column | Description |
|---|---|
| Test | Displays the name of the test form’s parent test. |
| Test Form Name | Displays the name of the test form. |
| Last Sat | Displays the date on which the test form was last taken by a candidate. |
| Pass Rate | Expresses how many candidates have passed the test form as a percentage. |
| Mean Time | Indicates the average time (in minutes) it took candidates to submit the test against the total allowed test time. |
| Mean Score | Expresses the average score achieved by all candidates as a percentage. The raw average score is also displayed against the total available marks in brackets. |
| Mean Attempted | Expresses the average number of items attempted by all candidates as a percentage. The raw average number of attempted items is also displayed against the total number of items in brackets. |
| Cronbach’s α | Expresses the estimated reliability of the test form. Cronbach’s α can take any value less than or equal to 1, including negative values. The closer Cronbach’s α is to 1, the more reliable the test form is estimated to be. |
| Tests Delivered | Displays the number of tests delivered to candidates. Select the number to view the Tests Delivered report. For more information, read Viewing Tests Delivered reports. |
| Items Delivered | Displays the number of items in the test form that have been delivered to candidates. Select the number to view the Items Delivered report. For more information, read Viewing Items Delivered reports. |
| Total Mark | Displays the total mark available for the test form version. |
| Version | Displays the test form’s version number. |
| Changed | Displays whether the item has been changed in Rescoring. |
| Scoring Data | Allows users to edit grade boundaries. Select Edit to open the Scoring Data panel. For more information, read Editing scoring data in Rescoring. |
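
To make the Cronbach’s α column easier to interpret, the following is a minimal sketch of the standard textbook formula, α = k/(k − 1) × (1 − Σ item variances / total score variance). This article does not describe how Surpass computes the value internally, so the function name `cronbach_alpha` and the use of population variance are assumptions made for illustration only.

```python
from statistics import pvariance


def cronbach_alpha(scores):
    """Estimate Cronbach's alpha from a candidates-by-items mark matrix.

    scores: list of rows, one per candidate; each row lists that
    candidate's mark on every item (all rows the same length).
    Hypothetical helper, not a Surpass API.
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("at least two items are needed")
    # Variance of each item's marks across candidates.
    item_variances = [pvariance([row[i] for row in scores]) for i in range(k)]
    # Variance of candidates' total marks.
    total_variance = pvariance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total marks have no variation")
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Items that always agree with each other give the maximum value.
consistent = [[1, 1, 1], [0, 0, 0], [1, 1, 1], [0, 0, 0]]
# Items that mostly disagree with each other give a negative value.
inconsistent = [[1, 0], [0, 1], [1, 0], [0, 1], [1, 1]]

alpha_consistent = cronbach_alpha(consistent)
alpha_inconsistent = cronbach_alpha(inconsistent)
```

The second data set shows why the value can be negative: when items tend to disagree, the summed item variances exceed the variance of the total marks.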

About the Items Delivered table

The Items Delivered table displays all items in the chosen test form. Each row is dedicated to a single item. Refer to the following table for information on each column.

| Column | Description |
|---|---|
| Item Position in Test | Displays the item’s position in the test form. This is not applicable if items are randomised or chosen by dynamic rules. NOTE: By default, the table is sorted by Item Position in Test in ascending order. |
| Item Name (ID) | Displays the item’s name. The item’s unique ID code is displayed in brackets. |
| Mean Mark / Max Mark Available | Displays the average mark achieved on the item and the item’s maximum possible mark. |
| Item Exposure | Displays the number of times the item has been shown to candidates in a test. |
| Attempted | Indicates how many candidates attempted the item as a percentage. |
| Viewed Only | Indicates how many candidates only viewed the item (and did not attempt a response) as a percentage. |
| Not Viewed | Indicates how many candidates did not see the item as a percentage. |
| Avg. Response Time (Seconds) | Displays candidates’ average response time on the item in seconds. |
| P Value | Displays the item’s probability value. A P value is a statistical representation of item difficulty calculated by dividing the number of times an item has been answered correctly by the number of times the item has been attempted. P values are represented as a decimal number between 0 and 1. The closer the P value is to 1, the easier the item is considered to be. If an item is answered correctly nine times out of ten, it will have a P value of 0.9. This could be considered an easy item. If an item is answered correctly five times out of ten, it will have a P value of 0.5. This could be considered a medium item. If an item is answered correctly two times out of ten, it will have a P value of 0.2. This could be considered a hard item. |
| R Value | Displays the item’s R value (also known as the Pearson product moment correlation coefficient). The R value measures the correlation between the item score and the test score. R values range between -1 and +1. A positive R value (0.1 to 1) indicates a positive correlation between candidates’ item scores and test scores. A neutral R value (0) indicates no correlation between candidates’ item scores and test scores. A negative R value (-1 to -0.1) indicates a negative correlation between candidates’ item scores and test scores. IMPORTANT: R values are only populated for non-dichotomously scored items. Non-dichotomously scored items can have multiple outcomes. Some examples of non-dichotomously scored items would be an Essay item worth ten marks and a Multiple Response item with four correct answer options worth four marks, where one mark is awarded for each correct answer option. |
| Point Biserial | Displays the item’s point biserial correlation coefficient. This is a special version of the Pearson R value that only applies to dichotomously scored items. Point biserial values range between -1 and +1. A positive point biserial value (0.1 to 1) indicates a positive correlation between candidates’ item scores and test scores. A neutral point biserial value (0) indicates no correlation between candidates’ item scores and test scores. A negative point biserial value (-1 to -0.1) indicates a negative correlation between candidates’ item scores and test scores. IMPORTANT: Point biserial values are only populated for dichotomously scored items. Dichotomously scored items only have two outcomes (correct and incorrect). An example of a dichotomously scored item would be a Multiple Choice item worth one mark. |
| DI | Displays the item’s discrimination index (DI). A discrimination index indicates the extent to which an item is able to successfully discriminate high-performing candidates from low-performing candidates. If more high-performing candidates get an item right than low-performing candidates, the item positively discriminates and has a DI between 0 and 1. An item with a positive DI generally supports the assessment quality of the test. If more low-performing candidates get an item right than high-performing candidates, the item negatively discriminates and has a DI between -1 and 0. An item with a negative DI can compromise the integrity of the test. |
| Scored | Indicates whether the candidate’s performance on the item contributed to their total mark for the test. NOTE: You are informed if an item has been used as both a scored and non-scored item in multiple test forms during the specified date range. |
| Changed | Displays whether the item has been changed in Rescoring. |
| Version | Displays the item’s version number. If multiple item versions have been delivered to candidates, you are able to view each version’s data. By default, the Items Delivered report displays the most recent version. |
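
The item statistics in the table above can be illustrated with a short sketch. The P value calculation follows the definition given in the P Value row. The correlation function is the standard Pearson formula, which reduces to the point biserial coefficient when item scores are 0 or 1. The discrimination index uses a common upper-group and lower-group convention (the top and bottom 27% of candidates by test score); this article does not state which method Surpass uses, so that choice, along with all function names and sample data, is an assumption for illustration.

```python
from math import sqrt


def p_value(correct, attempted):
    """Times answered correctly divided by times attempted (0 to 1)."""
    if attempted == 0:
        raise ValueError("item has no attempts")
    return correct / attempted


def pearson_r(item_scores, test_scores):
    """Pearson correlation between item scores and test scores.

    With 0/1 item scores this equals the point biserial coefficient.
    Returns None when either list has no variation.
    """
    n = len(item_scores)
    mean_x = sum(item_scores) / n
    mean_y = sum(test_scores) / n
    cov = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(item_scores, test_scores))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in item_scores))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in test_scores))
    if spread_x == 0 or spread_y == 0:
        return None
    return cov / (spread_x * spread_y)


def discrimination_index(item_correct, test_scores, fraction=0.27):
    """Upper-minus-lower group DI (assumed convention, not confirmed).

    Ranks candidates by test score, then compares how many in the top
    and bottom groups answered the item correctly.
    """
    n = len(test_scores)
    group = max(1, round(n * fraction))
    ranked = sorted(range(n), key=lambda i: test_scores[i], reverse=True)
    upper = sum(item_correct[i] for i in ranked[:group])
    lower = sum(item_correct[i] for i in ranked[-group:])
    return (upper - lower) / group


# Hypothetical data: ten candidates, one dichotomously scored item.
test_scores = [10, 9, 8, 7, 6, 5, 4, 3, 2, 1]
item_correct = [1, 1, 1, 1, 0, 1, 0, 0, 0, 0]

difficulty = p_value(sum(item_correct), len(item_correct))
point_biserial = pearson_r(item_correct, test_scores)
di = discrimination_index(item_correct, test_scores)
```

In this sample, the strongest candidates answer the item correctly and the weakest do not, so both the point biserial value and the DI are positive.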

Editing scoring data

You can change grade boundaries and edit Learning Outcome boundaries in the Scoring Data column of the Test Forms table. For more information, read Editing scoring data in Rescoring.

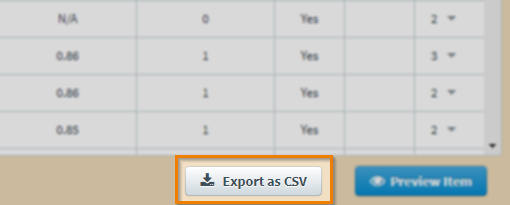

Exporting a rescoring session

Select Export as CSV to export the data displayed in the Items Delivered table.
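
Once exported, the CSV file can be reviewed outside Surpass. The sketch below flags items that might be worth a closer look in a rescoring session. It assumes the export's header row uses the same column names as the Items Delivered table (`Item Name (ID)`, `P Value`, `DI`), which this article does not confirm. The sample rows, the `flag_items` function, and the thresholds are all hypothetical.

```python
import csv
import io

# Hypothetical sample standing in for an exported file.
SAMPLE_EXPORT = """Item Name (ID),P Value,DI
Item A (101),0.9,0.35
Item B (102),0.2,-0.15
Item C (103),0.5,0.40
"""


def flag_items(csv_text, min_p=0.3, min_di=0.0):
    """Return names of items that are very hard or discriminate negatively.

    The thresholds are illustrative defaults, not Surpass guidance.
    Rows with missing or non-numeric statistics are skipped.
    """
    flagged = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        try:
            p = float(row["P Value"])
            di = float(row["DI"])
        except (KeyError, TypeError, ValueError):
            continue  # statistic not populated for this row
        if p < min_p or di < min_di:
            flagged.append(row.get("Item Name (ID)", ""))
    return flagged


flagged_items = flag_items(SAMPLE_EXPORT)
```

To run this against a real export, read the file's text with `open(path, newline="", encoding="utf-8")` and pass it to `flag_items`, adjusting the column names to match the actual header row.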

Further reading

To learn more about the Rescoring screen, read the following articles: